|

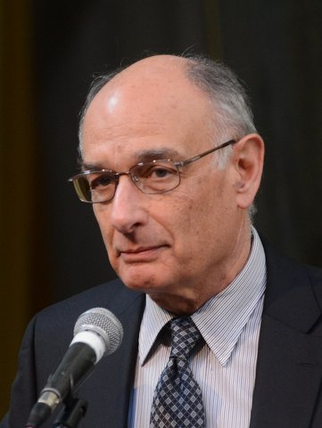

Professor Donald Goldfarb, the Avanessians Professor of IEOR at Columbia, is internationally recognized for his contributions to the field of optimization, and in particular for the development and analysis of efficient and practical algorithms for solving various classes of optimization problems. His most well-known and widely used algorithms include steepest-edge simplex algorithms for linear programming, the BFGS quasi-Newton method for unconstrained optimization, and the Goldfarb-Idnani algorithm for convex quadratic programming. He has also developed simplex and combinatorial algorithms for network flow problems, and interior-point methods for linear, quadratic and second-order cone programming. His recent work on robust optimization for portfolio selection, algorithms for image de-noising, compressed sensing and machine learning is very highly cited. He is a SIAM Fellow (2012), was awarded the Khachiyan Prize (2013) and the Prize for Research Excellence in the Interface between OR and CS (1995) by INFORMS, and is listed in The World’s Most Influential Scientific Minds, 2014, as being among the 99 most cited mathematicians between 2002 and 2012.

|

Abstract

Quasi-Newton Methods: Block Updates, Adaptive Step Sizes, and Stochastic Variants

We discuss several recent variants that we have developed for quasi-Newton methods and in particular for the BFGS method. The primary motivation for these developments is the need to solve optimization problems that arise in machine learning, which because of the enormous amounts of data involved in each computation of the function and gradient, usually require a stochastic optimization approach. The issues that we address in this talk are: (i) the use of sketching (i.e., Hessian actions) and block-updates to incorporate (noisy) curvature information; (ii) the determination of adaptive step sizes to avoid line searches for strictly convex self-concordant functions; and (iii) the development of stochastic BFGS variants for both convex and non-convex stochastic optimization problems. In this talk, several new theoretical results will be presented and illustrated by computational results.

We discuss several recent variants that we have developed for quasi-Newton methods and in particular for the BFGS method. The primary motivation for these developments is the need to solve optimization problems that arise in machine learning, which because of the enormous amounts of data involved in each computation of the function and gradient, usually require a stochastic optimization approach. The issues that we address in this talk are: (i) the use of sketching (i.e., Hessian actions) and block-updates to incorporate (noisy) curvature information; (ii) the determination of adaptive step sizes to avoid line searches for strictly convex self-concordant functions; and (iii) the development of stochastic BFGS variants for both convex and non-convex stochastic optimization problems. In this talk, several new theoretical results will be presented and illustrated by computational results.